By Nick Anderson (6-minute read)

Apple has announced two child safety features that will arrive as part of a future iOS update. Both have drawn heavy criticism from privacy advocates, but Apple maintains that its approach does not violate users' privacy.

Let's look at what Child Sexual Abuse Material (CSAM) is, how Apple plans to tackle it, and why its approach has sparked backlash from privacy experts worldwide.

Child Sexual Abuse Material (CSAM) is any content that depicts sexually explicit activity involving minors. CSAM detection is a technology that finds such content by matching it against a database of known CSAM.

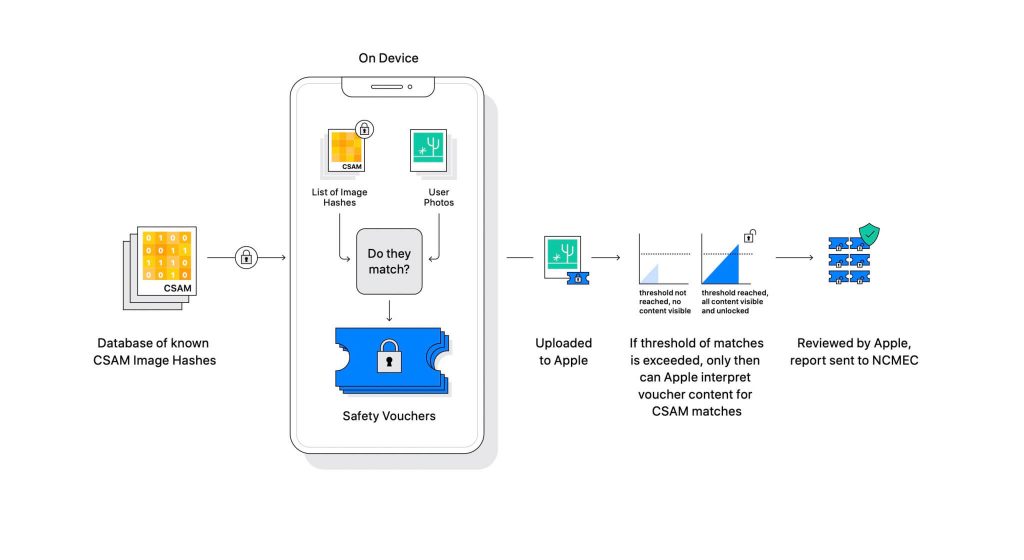

Apple revealed the technology as part of an upcoming software update. It works by comparing the photos users upload to iCloud against a database of known CSAM maintained by the National Center for Missing & Exploited Children (NCMEC) and other child safety organizations. Scanning the pictures themselves would be a huge breach of privacy, so instead Apple's NeuralHash technology computes a hash of each image, and those hashes are matched against the hashes provided by NCMEC.

A copy of the known CSAM hash database will be stored on every device, in a form Apple describes as unreadable, and the matching process is entirely cryptographic. According to Apple, the device creates a cryptographic safety voucher for each photo that encodes the match result, and the voucher is uploaded to iCloud along with the image when iCloud Photos is enabled.

A second mechanism, which Apple calls threshold secret sharing, keeps the vouchers secure against any intervention, including Apple's own. Think of it as a lock that only unlocks once the number of matches reaches a set threshold.

(Image credits: Apple)
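The lock analogy maps onto a classic cryptographic technique. Below is a minimal sketch of textbook Shamir secret sharing, assuming for illustration that each matching voucher carries one share of a per-account key. It shows the threshold idea only and is not Apple's implementation; the prime, the threshold of 3, and the function names are arbitrary choices for the demo.

```python
import secrets

# Field modulus for the demo: the Mersenne prime 2**127 - 1.
PRIME = 2**127 - 1


def make_shares(secret: int, threshold: int, count: int) -> list[tuple[int, int]]:
    """Split `secret` into `count` shares; any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be smaller than PRIME")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, highest degree first
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 to recover the polynomial's constant term."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical demo: a per-account key split so that 3 matching vouchers unlock it.
account_key = secrets.randbelow(PRIME)
shares = make_shares(account_key, threshold=3, count=5)

print(recover_secret(shares[:3]) == account_key)  # True: any 3 shares recover the key
```

In this scheme, holding fewer shares than the threshold reveals nothing about the key, which is exactly the property the lock analogy describes.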

Apple cannot see the contents of an individual voucher. Once the threshold is crossed, however, Apple steps in with a manual review, which exists to make sure the system has not produced an error. An Apple employee checks the flagged images and confirms whether they really are CSAM. If they are, the user's account is disabled and a report is sent to NCMEC, which works with law enforcement agencies.

Apple states that the threshold it selected gives less than a one-in-one-trillion chance per year of incorrectly flagging a given account.
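To see why a threshold drives the error rate down so sharply, here is a back-of-the-envelope sketch. It assumes that each photo independently triggers a false match with some small probability p, so the number of false matches in an account follows a binomial distribution. The per-image rate, photo count, and thresholds below are hypothetical and are not Apple's published figures.

```python
import math


def prob_at_least(t: int, n: int, p: float) -> float:
    """P(X >= t) for X ~ Binomial(n, p), with each term computed in log space
    so that large binomial coefficients do not overflow a float."""
    log_p, log_q = math.log(p), math.log1p(-p)
    log_n_fact = math.lgamma(n + 1)
    total = 0.0
    for k in range(t, n + 1):
        log_term = (log_n_fact - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                    + k * log_p + (n - k) * log_q)
        total += math.exp(log_term)  # underflows harmlessly to 0.0 for large k
    return total


# Hypothetical inputs: 10,000 photos a year, a one-in-a-million false match rate per photo.
photos, per_image_rate = 10_000, 1e-6
for threshold in (1, 10, 30):
    print(threshold, prob_at_least(threshold, photos, per_image_rate))
```

Under these assumptions, a threshold of 1 would wrongly flag roughly one account in a hundred, while requiring 30 matches pushes the figure far below one in a trillion. The independence assumption is a simplification.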

There is no denying that CSAM is a huge problem. End-to-end encryption was built to bolster privacy, but it also shields predators from law enforcement, letting them share such material without being detected.

One way to fight it is to scan every image uploaded to cloud services such as Dropbox, OneDrive, Google Drive, and iCloud. Apple instead chose a privacy-focused approach that compares image hashes rather than scanning the images themselves.

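A hash is a fixed-length value produced by passing an input, such as an image, through a hashing algorithm. The same input always produces the same hash, and different inputs almost always produce different ones, so instead of comparing the images themselves, their hash values can be compared to determine whether they are the same image. NeuralHash is a perceptual hash: unlike a cryptographic hash, which changes completely if a single pixel changes, it is designed to give visually similar versions of an image, such as a resized or recompressed copy, the same value.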
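The difference between a cryptographic hash and a perceptual one is easy to show in code. NeuralHash itself is a neural-network-based model and is not reproduced here; this sketch swaps in the much simpler average hash (aHash) on a tiny hypothetical 4×4 grayscale image, and `KNOWN_HASHES` is a made-up stand-in for a database like NCMEC's.

```python
import hashlib

Image = list[list[int]]  # rows of 8-bit grayscale pixel values


def sha256_hex(pixels: Image) -> str:
    """Cryptographic hash of the raw pixel bytes."""
    return hashlib.sha256(bytes(v for row in pixels for v in row)).hexdigest()


def average_hash(pixels: Image) -> int:
    """Toy perceptual hash: one bit per pixel, set when the pixel is brighter than the mean."""
    flat = [v for row in pixels for v in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for v in flat:
        bits = (bits << 1) | int(v > mean)
    return bits


original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [100, 110, 120, 130],
    [30, 40, 220, 230],
]
# The same picture, slightly brightened (as an edit or re-encode might do).
brightened = [[v + 3 for v in row] for row in original]

# Hypothetical database of known hashes.
KNOWN_HASHES = {average_hash(original)}

print(sha256_hex(original) == sha256_hex(brightened))  # False: any change scrambles SHA-256
print(average_hash(brightened) in KNOWN_HASHES)        # True: the perceptual hash still matches
```

Production perceptual hashes are far more robust than this toy, which is easily defeated by cropping or rotation, but the principle is the same: the comparison is done on hash values, not on the images.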

All of that sounds perfect, right? Not exactly. Privacy experts argue that nothing prevents Apple from swapping the CSAM database for another one. A different database could be used to identify human rights activists, dissidents, or anyone who challenges a government's narrative. In short, the system could be weaponized for surveillance.

Since the announcement, Apple has been under fire from privacy experts worldwide, who describe the technology as a backdoor that authoritarian governments will eventually exploit.

Although Apple has pledged to refuse any such demands from governments, the very existence of the technology worries experts. Privacy organizations such as the Electronic Frontier Foundation (EFF), as well as NSA whistleblower Edward Snowden, have spoken out against the move, and more than 90 civil society organizations have signed an open letter urging Apple to abandon its CSAM detection plans. The announcement has also reportedly sparked criticism inside Apple.

Interestingly, this is the same company that fought the FBI in federal court rather than create a custom version of iOS in the 2015 San Bernardino case. Apple argued that a custom iOS capable of bypassing the iPhone's security could be misused, even calling it the “software equivalent of cancer.”

Apple was wary of creating anything with the potential for misuse. Years later, however, it seems to be contradicting its own stance on privacy. Apple maintains that much of the skepticism stems from confusion about how the system works, but privacy experts have been unpersuaded by its explanations, including a threat model document that lays out how the system is designed to handle interference and errors.

The CSAM detection system runs partly on the device and partly in the cloud, and it only works fully when iCloud Photos is turned on. Turning iCloud Photos off disables the cloud half of the system, which is needed for detection results to reach Apple.

There is no way to turn off the on-device scanning. Apple has also designed the system so that it does not notify the user when CSAM is detected on the device.

Apple's child safety plan has two parts: CSAM detection in iCloud Photos and communication safety in Messages.

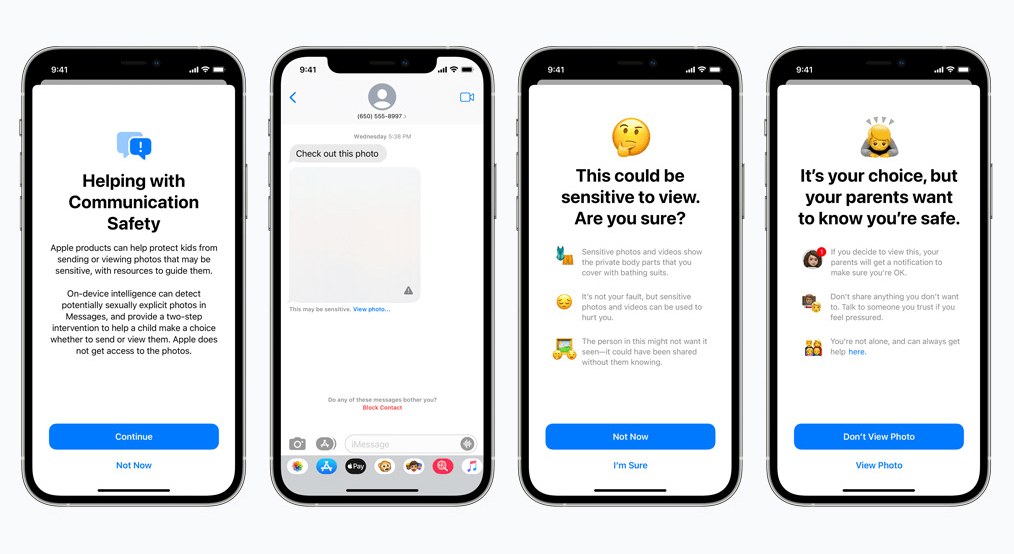

Communication safety in Messages works differently from its iCloud counterpart. It uses on-device machine learning to identify whether an incoming or outgoing message contains sexually explicit photos. Parents will be able to enable the feature for their children's accounts through Family Sharing.

If a photo is sexually explicit, it will be blurred and marked as sensitive. If the child chooses to view it anyway, the parent will receive a notification. The same applies when a child tries to send a sexually explicit photo.
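The decision flow for a single photo can be summarized as a small function. This is a hypothetical sketch of the behavior described above, not Apple's code: `is_explicit` stands in for the output of the on-device machine learning classifier, and `ChildAccount` with its flag is an invented model of the setting a parent turns on for a child's account.

```python
from dataclasses import dataclass


@dataclass
class ChildAccount:
    # Hypothetical flag: whether a parent has turned the feature on for this child.
    communication_safety_enabled: bool


def handle_photo(account: ChildAccount, is_explicit: bool, child_proceeds: bool) -> list[str]:
    """Actions taken for one sent or received photo, following the described flow."""
    if not account.communication_safety_enabled or not is_explicit:
        return ["allow photo"]
    actions = ["blur photo", "mark as sensitive"]
    if child_proceeds:
        # Viewing or sending a flagged photo anyway triggers a parental notification.
        actions += ["allow photo", "notify parent"]
    return actions


child = ChildAccount(communication_safety_enabled=True)
print(handle_photo(child, is_explicit=True, child_proceeds=False))
# ['blur photo', 'mark as sensitive']
print(handle_photo(child, is_explicit=True, child_proceeds=True))
# ['blur photo', 'mark as sensitive', 'allow photo', 'notify parent']
```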

Apple has since announced that it is delaying the rollout of its CSAM detection features.

We, as privacy advocates, strongly believe that any tool that exists can be manipulated for ill purposes. With more than a billion iPhones and iPads in use, the risk that oppressive governments could coerce Apple into repurposing this technology is very real.

© Copyright 2024 Fastest VPN - All Rights Reserved.
